The Filecoin Piece

- State: stable
- Theory Audit: n/a

The Filecoin Piece is the main unit of negotiation for data that users store on the Filecoin network. A Piece is not a unit of storage and has no fixed size; its size is only upper-bounded by the size of the Sector. If a Piece is larger than the Sector size that the miner supports, it has to be split into several Pieces so that each Piece fits into a Sector.

A Piece is an object that represents a whole or part of a File, and is used by Storage Clients and Storage Miners in Deals. Storage Clients hire Storage Miners to store Pieces.
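The splitting rule described above can be sketched as follows. This is a minimal illustration only: the function name `split_into_piece_sizes` and the parameter `max_piece_size` are hypothetical, and the sketch assumes both sizes are expressed in the same units (it ignores any padding overhead).

```python
from typing import List


def split_into_piece_sizes(data_size: int, max_piece_size: int) -> List[int]:
    """Return piece sizes such that every piece fits within max_piece_size.

    Hypothetical helper: max_piece_size stands in for the largest piece
    that the miner's Sector size can hold.
    """
    if data_size <= 0 or max_piece_size <= 0:
        raise ValueError("sizes must be positive")
    full_pieces, remainder = divmod(data_size, max_piece_size)
    sizes = [max_piece_size] * full_pieces
    if remainder:
        # The last piece carries whatever is left over.
        sizes.append(remainder)
    return sizes
```

Data that already fits yields a single piece, so `split_into_piece_sizes(3, 4)` returns a one-element list.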

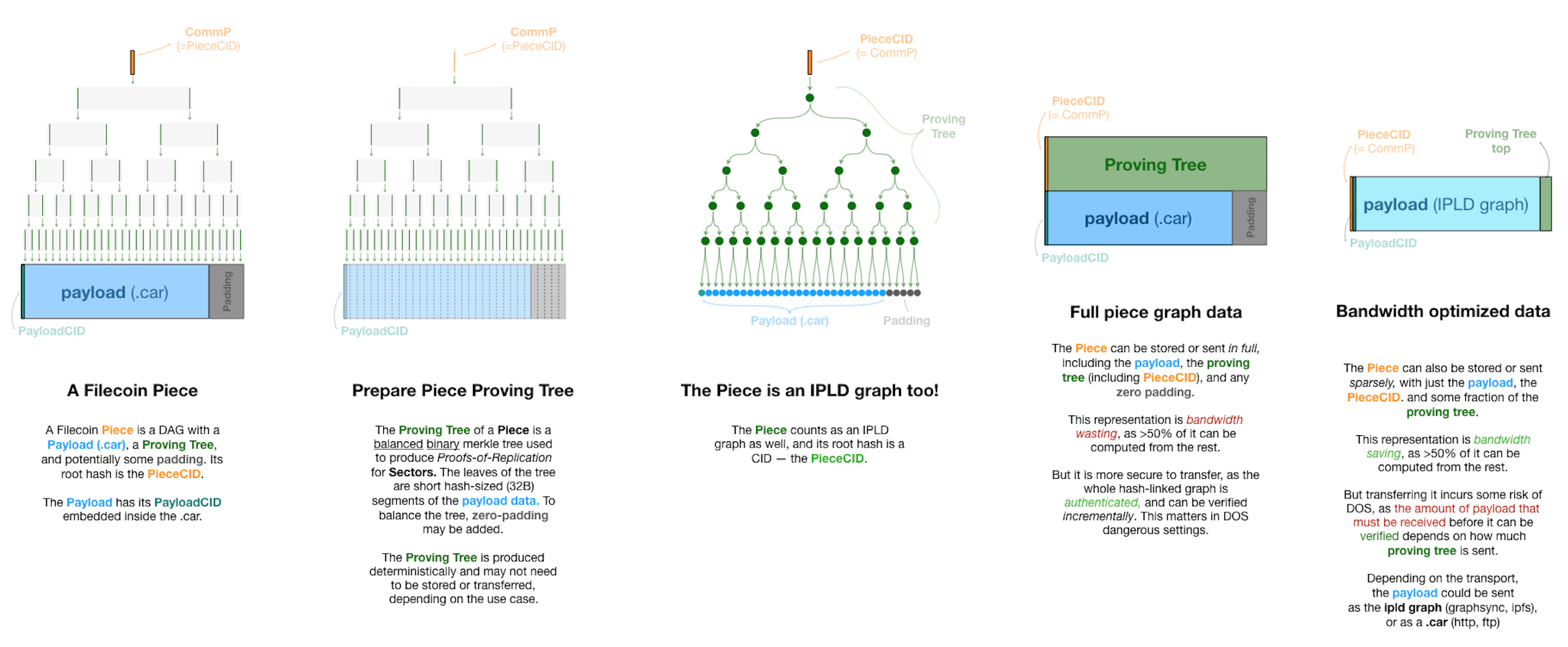

The Piece data structure is designed for proving storage of arbitrary IPLD graphs and client data. This diagram shows the detailed composition of a Piece and its proving tree, including both full and bandwidth-optimized Piece data structures.

Data Representation

- State: stable
- Theory Audit: n/a

It is important to highlight that data submitted to the Filecoin network goes through several transformations before it reaches the format in which the StorageProvider stores it.

Below is the process followed from the point a user starts preparing a file to store in Filecoin to the point that the provider produces all the identifiers of Pieces stored in a Sector.

The first three steps take place on the client side.

1. When a client wants to store a file in the Filecoin network, they start by producing the IPLD DAG of the file. The hash that represents the root node of the DAG is an IPFS-style CID, called the Payload CID.

2. In order to make a Filecoin Piece, the IPLD DAG is serialised into a “Content-Addressable aRchive” (.car) file, which is in raw bytes format. A CAR file is an opaque blob of data that packs together and transfers IPLD nodes. The Payload CID is common between the CAR’ed and un-CAR’ed constructions. This helps later during data retrieval, when data is transferred between the storage client and the storage provider.

3. The resulting .car file is padded with extra zero bits so that it can form a clean binary Merkle tree, which requires the padded size to be a power of two. First, a padding process called Fr32 padding is applied to the input file: it adds two (2) zero bits after every 254 bits, so that every 256 bits of output carry 254 bits of input. Next, the output of the Fr32 padding process is extended with zeros up to the next power-of-two size.
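The two padding stages can be sketched as below. This is an illustrative sketch under stated assumptions, not the Lotus code: the function names are hypothetical, the bit order within each chunk is assumed to be little-endian, and the input is first zero-extended to a multiple of 127 bytes (4 x 254 bits) so that it divides evenly into 254-bit chunks.

```python
CHUNK_BITS = 254      # payload bits carried by each 256-bit element
IN_BLOCK = 127        # 127 bytes = 4 * 254 bits of input
ELEMENT_BYTES = 32    # each output element is 256 bits


def fr32_pad(data: bytes) -> bytes:
    """Insert two zero bits after every 254 bits (assumed little-endian)."""
    if len(data) % IN_BLOCK != 0:
        raise ValueError("input length must be a multiple of 127 bytes")
    mask = (1 << CHUNK_BITS) - 1
    out = bytearray()
    for start in range(0, len(data), IN_BLOCK):
        block = int.from_bytes(data[start:start + IN_BLOCK], "little")
        for _ in range(4):
            # The low 254 bits become one element; its top two bits stay zero.
            out += (block & mask).to_bytes(ELEMENT_BYTES, "little")
            block >>= CHUNK_BITS
    return bytes(out)


def pad_piece(car_bytes: bytes) -> bytes:
    """Fr32-pad the payload, then zero-fill up to the next power of two."""
    if not car_bytes:
        raise ValueError("empty payload")
    remainder = len(car_bytes) % IN_BLOCK
    if remainder:
        car_bytes += bytes(IN_BLOCK - remainder)
    expanded = fr32_pad(car_bytes)
    target = 1 << (len(expanded) - 1).bit_length()
    return expanded + bytes(target - len(expanded))
```

Under these assumptions a 1000-byte payload expands to 1024 bytes, while a 1017-byte payload no longer fits into eight 127-byte blocks and therefore grows to 2048 bytes.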

In order to justify the reasoning behind these steps, it is important to understand the overall negotiation process between the StorageClient and a StorageProvider. The Piece CID, or CommP, is what is included in the deal that the client negotiates and agrees with the storage provider. When the deal is agreed, the client sends the file to the provider (using GraphSync). The provider has to construct the CAR file out of the file received and derive the Piece CID on their side. To prevent the client from sending a file different from the one agreed, the Piece CID that the provider generates has to be the same as the one included in the deal negotiated earlier.

The following steps take place on the StorageProvider side (apart from step 4 that can also take place at the client side).

4. Once the StorageProvider receives the file from the client, they calculate the Merkle root out of the hashes of the Piece (padded .car file). The resulting root of the clean binary Merkle tree is the Piece CID. This is also referred to as CommP or Piece Commitment and, as mentioned earlier, has to be the same as the one included in the deal.

5. The Piece is included in a Sector together with data from other deals. The StorageProvider then calculates the Merkle root for all the Pieces inside the Sector. The root of this tree is CommD (aka Commitment of Data or UnsealedSectorCID).

6. The StorageProvider then seals the Sector, and the root of the resulting Merkle tree is CommRLast.

7. Proof of Replication (PoRep), SDR in particular, generates another Merkle root hash called CommC, as an attestation that replication of the data whose commitment is CommD has been performed correctly.

8. Finally, CommR (or Commitment of Replication) is the hash of CommC || CommRLast.
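The commitment steps above can be illustrated with a small binary Merkle tree. This is a sketch only: the node hash is assumed to be SHA-256 with the two most significant bits of the last byte cleared (so every node fits in 254 bits), the function names are hypothetical, and `comm_r` uses the same hash purely as a placeholder because the text only says "the hash of" without naming the function.

```python
import hashlib

NODE_BYTES = 32


def node_hash(left: bytes, right: bytes) -> bytes:
    """Hash two child nodes (assumption: SHA-256 truncated to 254 bits)."""
    digest = bytearray(hashlib.sha256(left + right).digest())
    digest[31] &= 0x3F  # clear the two top bits of the last byte
    return bytes(digest)


def merkle_root(padded: bytes) -> bytes:
    """Root of a clean binary tree over 32-byte leaves.

    The input length must be a power of two, which is why the Piece is
    padded before the root is computed.
    """
    size = len(padded)
    if size < NODE_BYTES or size & (size - 1):
        raise ValueError("length must be a power of two of at least 32 bytes")
    level = [padded[i:i + NODE_BYTES] for i in range(0, size, NODE_BYTES)]
    while len(level) > 1:
        level = [node_hash(level[i], level[i + 1])
                 for i in range(0, len(level), 2)]
    return level[0]


def comm_r(comm_c: bytes, comm_r_last: bytes) -> bytes:
    """Placeholder for hash(CommC || CommRLast); real hash not specified here."""
    return node_hash(comm_c, comm_r_last)


# Two equally sized pieces placed side by side in a (tiny) sector.
piece_a = bytes([1]) * 128
piece_b = bytes([2]) * 128
comm_p_a = merkle_root(piece_a)
comm_p_b = merkle_root(piece_b)
comm_d = merkle_root(piece_a + piece_b)
```

Because both pieces have power-of-two sizes and sit at aligned offsets, the sector-level root `comm_d` equals `node_hash(comm_p_a, comm_p_b)`: each piece commitment is a subtree root of the sector tree. A provider can likewise compare the root it derives with the one agreed in the deal and reject the data if they differ.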

IMPORTANT NOTES:

- Fr32 is a 32-byte representation of a field element (which, in our case, is the arithmetic field of BLS12-381). To be well-formed, a value of type Fr32 must actually fit within that field, but this is not enforced by the type system. It is an invariant which must be preserved by correct usage. In the case of so-called Fr32 padding, two zero bits are inserted ‘after’ a number requiring at most 254 bits to represent. This guarantees that the result will be a well-formed Fr32, regardless of the value of the initial 254 bits. This is a ‘conservative’ technique, since for some initial values, only one bit of zero-padding would actually be required.
- Steps 2 and 3 above are specific to the Lotus implementation. The same outcome can be achieved in different ways, e.g., without using Fr32 bit-padding. However, any implementation has to make sure that the initial IPLD DAG is serialised and padded so that it gives a clean binary tree, and therefore, calculating the Merkle root out of the resulting blob of data gives the same Piece CID. As long as this is the case, implementations can deviate from the first three steps above.
- Finally, it is important to add a note related to the Payload CID (discussed in the first two steps above) and the data retrieval process. The retrieval deal is negotiated on the basis of the Payload CID. When the retrieval deal is agreed, the retrieval miner starts sending the unsealed and “un-CAR’ed” file to the client. The transfer starts from the root node of the IPLD Merkle Tree, so the client can validate the Payload CID from the beginning of the transfer and verify that the file they are receiving is the file they negotiated in the deal and not random bits.
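The remark that Fr32 padding is conservative can be checked numerically. The sketch below assumes the field in question is the scalar field of BLS12-381, whose modulus is the constant `BLS12_381_R`, and that elements are encoded as 32 little-endian bytes; the helper name `is_well_formed_fr32` is hypothetical.

```python
# Modulus of the BLS12-381 scalar field (a 255-bit prime).
BLS12_381_R = 0x73EDA753299D7D483339D80809A1D80553BDA402FFFE5BFEFFFFFFFF00000001


def is_well_formed_fr32(element: bytes) -> bool:
    """Check that a 32-byte value (assumed little-endian) fits in the field."""
    if len(element) != 32:
        raise ValueError("an Fr32 value is exactly 32 bytes")
    return int.from_bytes(element, "little") < BLS12_381_R


# Largest value with two zero padding bits: always inside the field.
largest_254_bit = ((1 << 254) - 1).to_bytes(32, "little")
# A 255-bit value that still fits, so one padding bit would have sufficed here.
small_255_bit = (1 << 254).to_bytes(32, "little")
# A 255-bit value that does not fit, which is why one bit is not always enough.
largest_255_bit = ((1 << 255) - 1).to_bytes(32, "little")
```

Since the modulus lies between 2^254 and 2^255, every 254-bit value is a valid element, while 255-bit values are valid only when they fall below the modulus.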

PieceStore

- State: stable
- Theory Audit: n/a

The PieceStore module allows for storage and retrieval of Pieces from some local storage. The piecestore’s main goal is to help the storage and retrieval market modules to find where sealed data lives inside of sectors. The storage market writes the data, and the retrieval market reads it in order to send it out to retrieval clients.

The implementation of the PieceStore module can be found here.
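As a rough illustration of the role described above, and not a rendering of the referenced implementation, a piece store can be thought of as a lookup from a Piece CID to the sector locations that hold it. All names below (`PieceLocation`, `InMemoryPieceStore`, and their methods and fields) are hypothetical.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass(frozen=True)
class PieceLocation:
    """Where a piece lives inside a sector (hypothetical fields)."""
    sector_id: int
    offset: int
    length: int


class InMemoryPieceStore:
    """Toy piece index: written by the storage side, read by the retrieval side."""

    def __init__(self) -> None:
        self._locations: Dict[str, List[PieceLocation]] = {}

    def add_piece_location(self, piece_cid: str, location: PieceLocation) -> None:
        # Called once a deal's piece has been placed into a sector.
        self._locations.setdefault(piece_cid, []).append(location)

    def get_piece_locations(self, piece_cid: str) -> List[PieceLocation]:
        # Called when a retrieval needs to find the data to unseal and send.
        if piece_cid not in self._locations:
            raise KeyError(f"unknown piece: {piece_cid}")
        return list(self._locations[piece_cid])


store = InMemoryPieceStore()
store.add_piece_location("example-piece-cid", PieceLocation(7, 0, 1024))
found = store.get_piece_locations("example-piece-cid")
```

A list of locations is kept per piece on the assumption that the same piece may appear in more than one deal and therefore in more than one sector.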